Welcome to the world of Python tokens! These tiny units are the foundation of Python code and play a central role in how the language is read and interpreted. By exploring token types such as identifiers, literals, operators, and keywords, you gain a deeper understanding of Python’s syntax. Whether you’re a coding novice eager to grasp the basics or a seasoned Python developer aiming to broaden your expertise, this blog gives you a solid foundation for understanding tokens in Python. Let’s explore Python’s building blocks!

Unveiling the Essence of Tokens in Python

Tokens are the smallest meaningful units of Python code. They are the building blocks of Python programs, representing names, values, and operations. The Python tokenizer generates them by scanning the source code. It discards comments and the spaces between tokens, but line breaks and leading indentation are significant and produce tokens of their own. The Python parser then uses these tokens to build a parse tree that reveals the program’s structure. Python compiles this tree to bytecode, which the interpreter executes.

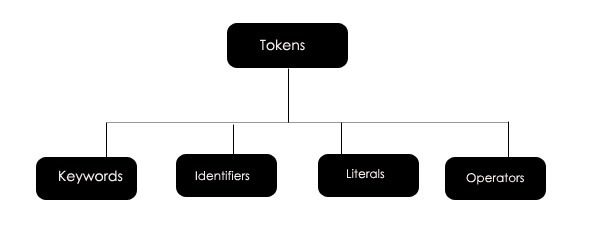
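To make the pipeline concrete, here is a minimal sketch that walks one line of code through the three stages using only the standard library. It assumes that the `tokenize` and `ast` modules are a fair stand-in for what the interpreter does internally; the interpreter uses its own built-in tokenizer and parser, and `ast` exposes an abstract syntax tree rather than the raw parse tree. The variable names are purely illustrative.

```python
import ast
import io
import tokenize

source = "answer = 6 * 7\n"

# Stage 1: split the characters into tokens (layout-only tokens are filtered out).
token_texts = [
    tok.string
    for tok in tokenize.generate_tokens(io.StringIO(source).readline)
    if tok.string.strip()
]
print(token_texts)  # ['answer', '=', '6', '*', '7']

# Stage 2: build a syntax tree that describes the program's structure.
tree = ast.parse(source)
print(ast.dump(tree))

# Stage 3: compile the tree to a code object (bytecode) and execute it.
namespace = {}
exec(compile(tree, filename="<example>", mode="exec"), namespace)
print(namespace["answer"])  # 42
```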

Types of Tokens in Python

In the Python language, understanding the distinct types of tokens is pivotal, as each serves a unique function. Python encompasses various token types, including identifiers, literals, operators, keywords, delimiters, and whitespace. Let’s explore each type’s role in executing a Python script:

1. Identifiers in Python

Identifiers are user-defined names assigned to variables, functions, classes, modules, and other objects. An identifier may contain letters, digits, and underscores, but it cannot begin with a digit and cannot be a keyword. By convention, variable and function names use “snake_case”, which aids readability. Examples of valid identifiers include `my_variable`, `my_function`, and `_my_private_variable`. Invalid identifiers include `1my_variable`, which starts with a digit, and `def`, which is a reserved keyword.
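A quick way to check these rules yourself is sketched below. The helper name `is_valid_identifier` is made up for this example; it simply combines two standard-library checks, `str.isidentifier()` and `keyword.iskeyword()`.

```python
import keyword


def is_valid_identifier(name: str) -> bool:
    """Return True if `name` could be used as a variable, function, or class name."""
    # isidentifier() checks the character rules; iskeyword() rules out reserved words.
    return name.isidentifier() and not keyword.iskeyword(name)


for candidate in ["my_variable", "_my_private_variable", "1my_variable", "def"]:
    print(f"{candidate!r}: {is_valid_identifier(candidate)}")
```

Note that a name followed by parentheses, such as `my_function()`, is a call expression made of three tokens rather than a single identifier.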

2. Keywords in Python

Keywords are reserved words in Python, serving specific syntactic and structural purposes. Recent Python 3 releases have 35 keywords (the exact count can vary between versions), including `and`, `or`, `if`, `else`, `for`, `while`, `True`, `False`, and `None`. These words have special meaning and cannot be used as identifiers.
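Since the keyword list depends on the interpreter version, it is safest to ask your own interpreter. The sketch below uses the standard-library `keyword` module; the `getattr` fallback is there because the soft-keyword list is assumed to be missing on older versions.

```python
import keyword
import sys

version = f"{sys.version_info.major}.{sys.version_info.minor}"
print(f"Python {version} has {len(keyword.kwlist)} keywords:")
print(keyword.kwlist)

# Soft keywords (such as match and case) are only reserved in specific contexts.
# Older interpreters do not expose this list, so fall back to an empty one.
soft_keywords = getattr(keyword, "softkwlist", [])
print("Soft keywords:", soft_keywords)
```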

3. Literals in Python

Literals represent constant values directly specified in the source code. Python supports various literal types, such as string literals enclosed in single or double quotes, numeric literals (integers, floats, complex numbers), boolean literals (`True` and `False`), and special literals like `None`.
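As a rough illustration, the snippet below evaluates a few literal strings with `ast.literal_eval` and reports the type each one produces. The sample list and the helper name `literal_type_name` are invented for this example.

```python
import ast

# Source text for one literal of each kind mentioned above.
literal_sources = ["'hello'", '"world"', "42", "3.14", "3j", "True", "None"]


def literal_type_name(source: str) -> str:
    """Safely evaluate a literal and return the name of its type."""
    return type(ast.literal_eval(source)).__name__


for source in literal_sources:
    print(f"{source:>8} -> {literal_type_name(source)}")
```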

4. Operators in Python

Operators are symbols or special words that perform operations on operands. Python’s operators encompass arithmetic, assignment, comparison, logical, identity, membership, and bitwise operators. Examples include `+`, `-`, `=`, `==`, `and`, `or`, `is`, `in`, and `&`. Word operators such as `and`, `or`, `is`, and `in` are also keywords.
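One detail worth seeing in practice: the standard-library tokenizer reports symbolic operators as `OP` tokens, while word operators like `and` and `is` come through as `NAME` tokens. The sketch below shows this; `classify_tokens` is a hypothetical helper written for this post.

```python
import io
import tokenize

# Token types that describe layout rather than content.
LAYOUT = {
    tokenize.NEWLINE, tokenize.NL, tokenize.ENDMARKER,
    tokenize.INDENT, tokenize.DEDENT, tokenize.COMMENT,
}


def classify_tokens(source: str):
    """Return (type name, text) pairs for every non-layout token."""
    tokens = tokenize.generate_tokens(io.StringIO(source).readline)
    return [
        (tokenize.tok_name[tok.type], tok.string)
        for tok in tokens
        if tok.type not in LAYOUT
    ]


line = "total = a + b if a is not None and b in items else 0\n"
for kind, text in classify_tokens(line):
    print(f"{kind:<6} {text}")
```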

5. Delimiters in Python

Delimiters are characters or symbols that mark the boundaries of different elements in Python code. Parentheses `()`, brackets `[]`, braces `{}`, commas `,`, colons `:`, and semicolons `;` are examples. They group expressions, separate items in a sequence, introduce the body of a function, class, or other block, and separate multiple statements written on one line.
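The tokenizer gives each delimiter its own exact type name, which you can inspect through the `exact_type` attribute of a token. Here is a small sketch; the helper `exact_token_names` and the sample line are assumptions made for illustration.

```python
import io
import tokenize


def exact_token_names(source: str):
    """Return (text, exact type name) pairs for operator and delimiter tokens."""
    tokens = tokenize.generate_tokens(io.StringIO(source).readline)
    return [
        (tok.string, tokenize.tok_name[tok.exact_type])
        for tok in tokens
        if tok.type == tokenize.OP
    ]


line = "data = {'k': [1, 2], 'v': (3,)}; print(data)\n"
for text, name in exact_token_names(line):
    print(f"{text!r:<5} {name}")
```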

6. Whitespace and Indentation in Python

Whitespace and indentation are crucial in Python’s syntax. Unlike many languages, Python uses indentation to delineate code blocks. Four spaces per level is the convention recommended by PEP 8 rather than a language requirement, but indentation must be consistent within a block or the code will not run.
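Indentation is not just cosmetic: the tokenizer turns changes in indentation into `INDENT` and `DEDENT` tokens. The following sketch prints them for a small nested block; the sample source and the helper name `layout_tokens` are made up for this example.

```python
import io
import tokenize

source = (
    "if ready:\n"
    "    start()\n"
    "    if verbose:\n"
    "        log()\n"
    "done()\n"
)


def layout_tokens(code: str):
    """Return (type name, line number) for each INDENT and DEDENT token."""
    wanted = {tokenize.INDENT, tokenize.DEDENT}
    tokens = tokenize.generate_tokens(io.StringIO(code).readline)
    return [
        (tokenize.tok_name[tok.type], tok.start[0])
        for tok in tokens
        if tok.type in wanted
    ]


for name, line_number in layout_tokens(source):
    print(f"{name:<6} reported at line {line_number}")
```

Two levels of nesting produce two `INDENT` tokens, and returning to the top level produces two matching `DEDENT` tokens.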

Tokenizing in Python

Tokenizing, the process of breaking a stream of characters into tokens, is the first step in Python’s compilation and interpretation. The Python tokenizer, or lexer, reads source code character by character, grouping the characters into tokens based on context. The parser relies on these token types to build a parse tree, and knowing how code is tokenized makes syntax errors easier to understand and debug.
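To watch the tokenizer at work, you can feed a line of source to the standard-library `tokenize` module and print what comes out. This is only a sketch with an invented sample line. Notice that this module reports comments as `COMMENT` tokens, so tools can see them even though the parser has no use for them.

```python
import io
import tokenize

source = "price = 19.99  # in dollars\n"

for tok in tokenize.generate_tokens(io.StringIO(source).readline):
    type_name = tokenize.tok_name[tok.type]
    # start and end are (row, column) pairs within the source text.
    print(f"{type_name:<10} {tok.string!r:<16} start={tok.start} end={tok.end}")
```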

How to Identify Tokens in a Python Program

Identifying tokens in Python can be accomplished using the built-in Python tokenizer or a regular expression library. The Python tokenizer, accessed via the `tokenize` module, yields a series of tokens, each represented as a named tuple holding the token type, its text, its start and end positions, and the source line it came from. Alternatively, a regular expression library can be employed to match token patterns based on rules you define.
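The regular-expression approach can be sketched with the standard-library `re` module. The token names and patterns below are simplified assumptions that cover only a tiny arithmetic subset, not Python’s real lexical grammar.

```python
import re

# Each entry is (token name, pattern). Order matters: earlier patterns win.
TOKEN_SPEC = [
    ("NUMBER", r"\d+(?:\.\d+)?"),
    ("NAME", r"[A-Za-z_]\w*"),
    ("OP", r"==|[-+*/=()]"),
    ("SKIP", r"\s+"),
    ("MISMATCH", r"."),
]
TOKEN_RE = re.compile("|".join(f"(?P<{name}>{pattern})" for name, pattern in TOKEN_SPEC))


def regex_tokenize(text: str):
    """Return (token name, text) pairs, raising ValueError on unknown characters."""
    tokens = []
    for match in TOKEN_RE.finditer(text):
        kind = match.lastgroup
        if kind == "SKIP":
            continue  # whitespace between tokens carries no meaning here
        if kind == "MISMATCH":
            raise ValueError(f"Unexpected character {match.group()!r} at position {match.start()}")
        tokens.append((kind, match.group()))
    return tokens


print(regex_tokenize("total = (price + 2.5) * count"))
```

For real Python source, the `tokenize` module is the safer choice, because it already handles strings, indentation, and the many edge cases a hand-written pattern list would miss.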

Libraries in Python

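The term tokenization also appears in natural language processing (NLP), where it means splitting text into words or sentences rather than splitting source code into language tokens. Several Python libraries cater to that need: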

1. NLTK (Natural Language Toolkit):

– Comprehensive NLP library.

– Tools for word tokenization, sentence tokenization, and part-of-speech tagging.

– Suitable for general NLP tasks.

2. SpaCy:

– Known for speed and accuracy.

– Offers various NLP features, including tokenization, part-of-speech tagging, named entity recognition, and dependency parsing.

– Ideal for large datasets and tasks requiring speed.

3. TextBlob:

– Lightweight and user-friendly NLP library.

– Includes tools for word tokenization, sentence tokenization, and part-of-speech tagging.

– Suitable for small to medium-sized datasets.

4. Tokenize:

– Simple and lightweight library for text tokenization.

– Supports various tokenization schemes.

– Ideal for tasks emphasizing simplicity and speed.

5. RegexTokenizer:

– Powerful tokenizer using regular expressions.

– Allows custom tokenization schemes.

– Suitable for tasks requiring custom tokenization or prioritizing performance.
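To give a feel for what word and sentence tokenization mean, here is a deliberately naive sketch built on the standard-library `re` module. It does not use or imitate the API of any library listed above. The function names and patterns are assumptions for illustration, and real NLP tokenizers handle abbreviations, quotes, and many other cases that this one ignores.

```python
import re


def word_tokenize(text: str):
    """Split text into words (keeping simple contractions) and punctuation marks."""
    return re.findall(r"\w+(?:'\w+)?|[^\w\s]", text)


def sentence_tokenize(text: str):
    """Naively split after '.', '!' or '?' when followed by whitespace."""
    return [part for part in re.split(r"(?<=[.!?])\s+", text.strip()) if part]


text = "Tokens matter. Don't they? Yes!"
print(sentence_tokenize(text))
print(word_tokenize(text))
```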

Conclusion

Tokens are the elemental units of Python code. Understanding how the tokenizer sees identifiers, keywords, literals, operators, delimiters, and indentation makes syntax errors easier to diagnose and helps you write precise, readable code. That knowledge is just as useful when you build tools that read Python source, such as linters, formatters, and syntax highlighters. Get comfortable with Python tokens and your projects will benefit.

Frequently Asked Questions (FAQ)

Q: What is the role of tokens in Python programming?

A: Tokens in Python are the smallest units of a program, representing keywords, identifiers, operators, and literals. They are essential for the Python interpreter to understand and process code.

Q: How are tokens used in Python?

A: The tokenizer breaks Python code into tokens, and the parser then uses those tokens to work out the structure of the program so the interpreter can execute it accurately.

Q: Can you provide examples of Python keywords?

A: Certainly! Some common Python keywords include `if`, `else`, `while`, and `for`.

Q: Why is tokenization important in Python?

A: Tokenization is crucial because it helps the Python interpreter understand the structure and syntax of code, ensuring it can be executed correctly.

Q: What should I keep in mind when using tokens in my Python code?

A: When working with tokens, prioritize code readability, follow naming conventions, and avoid name conflicts: keywords cannot be used as identifiers, and reusing the name of a built-in such as `list` hides the original.